A/B testing is something you need to be doing right now, especially when small improvements of, say, 1% or 2% can produce impressive revenue gains down the line.

What Is A/B Testing?

Before we get into the specifics of an amazing A/B testing framework, let’s take a step back and make sure we are all on the same page about A/B testing in general. In digital marketing and conversion optimization, A/B testing (also written AB testing) is the process of testing two versions of a webpage or app that differ in controlled ways.

Each visitor is randomly shown one version or the other. As performance data is collected, the versions are compared to determine which one performs better.
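One common way to do the random split (a sketch, not necessarily how any particular testing tool does it) is to hash a stable visitor ID together with the experiment name. The visitor then always sees the same version on return visits, while the overall traffic still splits roughly 50/50. The function name `assign_variant` and the experiment name below are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically assign a visitor to one variant.

    Hashing "experiment:user_id" gives each visitor a stable, effectively
    random bucket: the same visitor always gets the same version, and
    different experiments split traffic independently of each other.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "homepage-headline")] += 1
    print(counts)  # roughly a 50/50 split
```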

What Are the Benefits of A/B Testing?

Testing variations of a website or app with statistical rigor allows you to measure and optimize your pages so that you are maximizing conversions. Whether you’re testing a change to the header or just the color of a button, don’t rely on theory to guide these decisions: test them and let the data drive decision making.

Let’s do some quick math to paint a picture of the impact it can have.

Imagine your product sells at $100 and you have 10,000 visits a month to your webpage. 3% of them convert, generating $30,000 in revenue for that one product.

You A/B test some changes to the page, and your test shows that the new page version improves your conversion rate by 3% (a relative improvement), so you’re now converting 3.09% of visitors. An extra 0.09 percentage points of conversions doesn’t sound like much, but you’ve just generated an additional $900 a month in revenue. Even if you stop testing at that point, you’ve added $10,800 in annual revenue. If you keep testing, and run two more tests with the same 3% improvement in conversion rate, your conversion rate goes up by about 9.3% (1.03 × 1.03 × 1.03 ≈ 1.093). It’s a lot like compound interest. Your first month at the new conversion rate will bring in about $2,782 in incremental revenue. Over a year, that adds up to an incremental $33,382.

In this particular example, a year of incremental revenue from testing is worth more than a full month of the original $30,000 in revenue.

Please note that this assumes traffic stays constant. You didn’t invest in more ads, content-distribution emails, site-wide SEO, or anything else that would grow your traffic. You’re just getting more value out of the traffic you already have. You’re optimizing the dollar value of each visitor.
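If you want to rerun the revenue math with your own numbers, here is a small Python sketch. The inputs are the example’s figures (10,000 visits a month, a 3% baseline conversion rate, a $100 product, and a 3% relative lift per winning test); traffic is held constant, and each win multiplies the conversion rate by 1.03, which is where the compounding comes from.

```python
def monthly_revenue(visits: int, conversion_rate: float, price: float) -> float:
    """Revenue for one month at a fixed traffic level."""
    return visits * conversion_rate * price


VISITS = 10_000   # monthly visits (from the example)
BASE_RATE = 0.03  # 3% baseline conversion rate
PRICE = 100.0     # $100 product
LIFT = 0.03       # each winning test improves the rate by 3% (relative)

baseline = monthly_revenue(VISITS, BASE_RATE, PRICE)
for wins in (1, 3):
    # Relative lifts multiply, like compound interest: (1 + LIFT) ** wins
    new_rate = BASE_RATE * (1 + LIFT) ** wins
    extra_month = monthly_revenue(VISITS, new_rate, PRICE) - baseline
    print(f"{wins} win(s): rate {new_rate:.4%}, "
          f"+${extra_month:,.0f}/month, +${extra_month * 12:,.0f}/year")
```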

4 Types of A/B Tests That Deliver Results

There are numerous metrics and areas of data to explore. Here are four ways we can use split testing to make sure we have the best conversion rates possible.

Pricing Experiments

There used to be a marketing theory that prices ending in 7 converted better than any other number. Why? Prices ending in 0 (zero) had been found to feel too general and not specific enough, so 9 became the common choice. Then prices ending in 9 became so common that they no longer stirred up a notable positive response or moved conversion rates. So why do you think you’re seeing prices ending in 7 now?

The 7 originally came out of A/B tests in which prices were varied to find the best-converting point. That is what the data suggested, and you can still see it in some SaaS pricing.

This may not be the right recommendation for your website and your audience. It’s a good idea to run your own tests, and find the number that best converts for you in particular. Use best practice and industry knowledge to guide what you try, but let your own A/B testing data drive the decision.

On a side note, one related use case for A/B testing is optimizing the steps where users drop off in the checkout funnel. We love using Amplitude Experiment for that.
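Before testing funnel steps, it helps to see where the drop-off actually happens. Below is a minimal sketch that computes, for each step, the share of users who continued from the previous step. The step names and counts are hypothetical, and in practice they would come from your analytics or experimentation tool rather than a hard-coded list.

```python
def funnel_dropoff(steps):
    """Return (transition, share that continued, share that dropped off) per step."""
    report = []
    for (prev_name, prev_count), (name, count) in zip(steps, steps[1:]):
        continued = count / prev_count
        report.append((f"{prev_name} -> {name}", continued, 1 - continued))
    return report


# Hypothetical checkout funnel counts, for illustration only.
funnel = [("cart", 1000), ("shipping", 620), ("payment", 480), ("confirmation", 410)]

for step, cont, drop in funnel_dropoff(funnel):
    print(f"{step:<25} continued {cont:6.1%}  dropped {drop:6.1%}")
```

The transition with the largest drop-off is a natural first candidate for a test, though it is not automatically the one with the most revenue at stake.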

Color Theory

Another example of A/B testing a website or specific page is the color of the CTA.

Yellow was a popular favorite for a long time. Again, thanks to A/B testing, data showed that yellow buttons converted better. Since then, the industry has tested colors much more extensively.

Data from more in-depth testing suggests that what matters is the contrast between the background and the CTA: not yellow specifically, but how eye-catching the CTA is within the context of the page. Still, it’s always a good idea to test variations and see what works for your specific CTA in the context of your specific branding.
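If you want a number for contrast before you build variations, one option is the WCAG 2.x contrast ratio, which runs from 1:1 (identical colors) to 21:1 (black on white). Treating it as a proxy for how eye-catching a CTA is, is our assumption rather than something the testing data above establishes. The hex colors below are arbitrary examples.

```python
def _channel_to_linear(c: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x definition)."""
    s = c / 255
    return s / 12.92 if s <= 0.03928 else ((s + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """WCAG relative luminance of a color like "#1E73BE" (0 = black, 1 = white)."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return (0.2126 * _channel_to_linear(r)
            + 0.7152 * _channel_to_linear(g)
            + 0.0722 * _channel_to_linear(b))


def contrast_ratio(color_a: str, color_b: str) -> float:
    """WCAG contrast ratio between two colors, from 1.0 up to 21.0."""
    lighter, darker = sorted(
        (relative_luminance(color_a), relative_luminance(color_b)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


# Example CTA colors (arbitrary) against a white and a dark navy background.
for button in ("#FFD700", "#1E73BE"):
    print(button, "on white:", round(contrast_ratio(button, "#FFFFFF"), 2))
print("#FFD700 on navy:", round(contrast_ratio("#FFD700", "#1B2A49"), 2))
```

The same yellow scores poorly on a white page and well on a dark one, which is consistent with the idea that the background matters as much as the button color.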

Mouse Tracking

Something as simple as tracking the journey of the user on a webpage can lead to great actionable CRO data. Where do visitors focus their time and attention? Where do they get to on the page when they decide to leave?

There is a variety of mouse-tracking and heat-map software that shows you exactly where on the page your users spend most of their time, and where they drop off. By studying users’ interactions with your website this way, you can let the data determine feature layouts, CTA placements, and so on.
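As a simple example of turning that kind of data into something actionable, here is a sketch that computes what share of sessions scrolled at least to each depth of the page. It assumes you can export the maximum scroll depth per session as a percentage; the export format varies by tool, and the session values below are made up for illustration.

```python
def reach_by_depth(max_depths, bands=(25, 50, 75, 100)):
    """Share of sessions that scrolled at least to each depth band (in % of page)."""
    total = len(max_depths)
    return {band: sum(d >= band for d in max_depths) / total for band in bands}


# Hypothetical max scroll depth (% of page) for ten sessions.
sessions = [100, 30, 55, 80, 20, 100, 45, 60, 25, 90]

for band, share in reach_by_depth(sessions).items():
    print(f"reached {band:>3}% of page: {share:.0%}")
```

If most visitors never reach the depth where your CTA sits, moving it higher is a natural hypothesis to test.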

With this type of knowledge, you are able to make educated decisions to design your tests and measure the results of those changes.

Heuristic Analysis

It’s not enough to design a good looking web page or app; the page also has to persuade the audience to complete the desired action.

Heuristics are empirical rules of thumb, or best practices, that have been tested in the past and are applied when producing UX designs. However, each page, product, and service is unique. Rather than just making sure your page fits industry best practice (that is, best practice that comes from somebody else’s testing), it makes sense to test elements such as your call-to-action placement and measure their effect on conversion rates in real time. Let the design of your website be data driven.

The Importance of Using an A/B Testing Framework

You can’t just rush into A/B testing. A haphazard approach will lead to failure.

If you’re like most companies, you only have so many resources and so much money, so you have to learn to prioritize, sometimes ruthlessly. If you don’t, your growth will eventually stagnate, or you’ll start shrinking. A/B testing is the fastest, easiest, and most cost-effective way to discover how to drive more traffic, generate more leads, and create more sales. If you want to succeed with A/B testing, you need to develop a strategy and set priorities ahead of time, which can be tricky to do.

Here at McGaw.io, we’ve designed something we call the VICE A/B testing framework. Using this framework, we’re able to avoid a lot of the common A/B testing failures that are caused by lack of strategy and organization.

In this post, we’re going to go through the VICE framework and break down exactly how it works. We’ll explore how you can use the VICE approach to maximize your goals (like revenue or user sign ups) without having to spend more money on driving traffic. By the end, you’ll understand how to use this framework in your own business so that you can benefit from all that A/B testing has to offer.

The Importance of Creating Hypotheses

If you take a look at the McGaw.io blog, you’ll see that we’ve already covered the basics of how you can get started with A/B testing, like what to test and what tools to use.

The focus of this post is to explore how you can conduct A/B tests that are more likely to produce beneficial results—and the key to achieving that is our VICE Framework and Template for A/B Testing.

Before you dive into any A/B testing, you first need to form some hypotheses.

Hypotheses are essentially what you think will happen as a result of making certain changes.

When using the VICE Framework, we leverage hypotheses as our starting point. Generating hypotheses is important because without them, your experiments will lack direction. It’s a good idea to come up with a wide range of hypotheses ahead of time. Having a variety of hypotheses lined up will improve your ability to quickly implement tests in the future, increasing the rate at which you can raise website conversions.

You should create at least one hypothesis for each element of a page you want to test.

Pro Tip: Refrain from A/B testing more than one element of a page at any given time. Running overlapping A/B tests will create confusing data and poor results.


Testing multiple overlapping variations is a “multivariate test,” which is a different sort of test design than a typical A/B split test.

Pro Tip: Though it can be helpful to have some background knowledge regarding what works well when running tests, you don’t always have to follow best practice. In some cases, you may find that making radical changes and going against best practice actually leads to better conversions.

The VICE A/B Testing Framework

When it comes to A/B testing, it’s important to get some quick wins under your belt.

When you take care of low hanging fruit, you’re in a better position to spend time on the more challenging experiments you want to dig into. Plus, the sooner you have some winning A/B tests, the more you’ll gain from the compounding improvements that come with improving conversions.

Pro Tip: If you’re doing client work, generating quick wins can be even more important, since it gives you the opportunity to prove that A/B testing is worth focusing on.

With that in mind, how can you identify the low hanging fruit and determine the experiments or hypotheses that are most likely to work?

Sure, a crystal ball might be helpful here, but odds are you don’t have one.

Nevertheless, here at McGaw.io, we think we have the next best thing—the VICE approach.

VICE stands for:

V – Velocity

I – Impact

C – Confidence

E – Ease

The idea here is that you score your hypotheses from 0–10 (10 being highest/most preferable), in relation to each of the above factors.

You then tally up these scores so that you have a “total.”


This total helps you gauge how likely a hypothesis or experiment is to produce results compared with all of the other tests you want to run on a site or page. If you’re looking to generate some quick wins, it can be helpful to prioritize the tests with the highest scores.
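If you keep your backlog in code rather than a spreadsheet, the scoring and ranking step is short. Here is a minimal sketch: each hypothesis gets a 0-10 score for Velocity, Impact, Confidence, and Ease, the total is the plain sum, and the backlog is sorted by total. The `Hypothesis` class and the example scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    name: str
    velocity: int    # 0-10: how quickly the test can reach a reliable result
    impact: int      # 0-10: how much the change could move your goal
    confidence: int  # 0-10: how likely you think the change is to win
    ease: int        # 0-10: how little time and skill it takes to build

    def __post_init__(self):
        for attr in ("velocity", "impact", "confidence", "ease"):
            value = getattr(self, attr)
            if not 0 <= value <= 10:
                raise ValueError(f"{attr} must be between 0 and 10, got {value}")

    @property
    def total(self) -> int:
        return self.velocity + self.impact + self.confidence + self.ease


def prioritize(hypotheses):
    """Sort hypotheses so the highest VICE total comes first."""
    return sorted(hypotheses, key=lambda h: h.total, reverse=True)


# Hypothetical backlog, for illustration only.
backlog = [
    Hypothesis("Bold the navigation text", velocity=3, impact=2, confidence=3, ease=10),
    Hypothesis("Add a testimonial to the landing page", velocity=6, impact=6, confidence=7, ease=8),
    Hypothesis("Redesign the pricing page", velocity=7, impact=9, confidence=5, ease=2),
]
for h in prioritize(backlog):
    print(f"{h.total:>2}  {h.name}")
```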

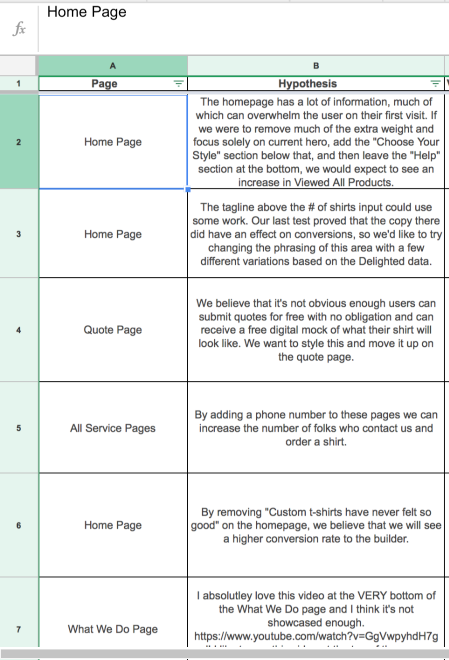

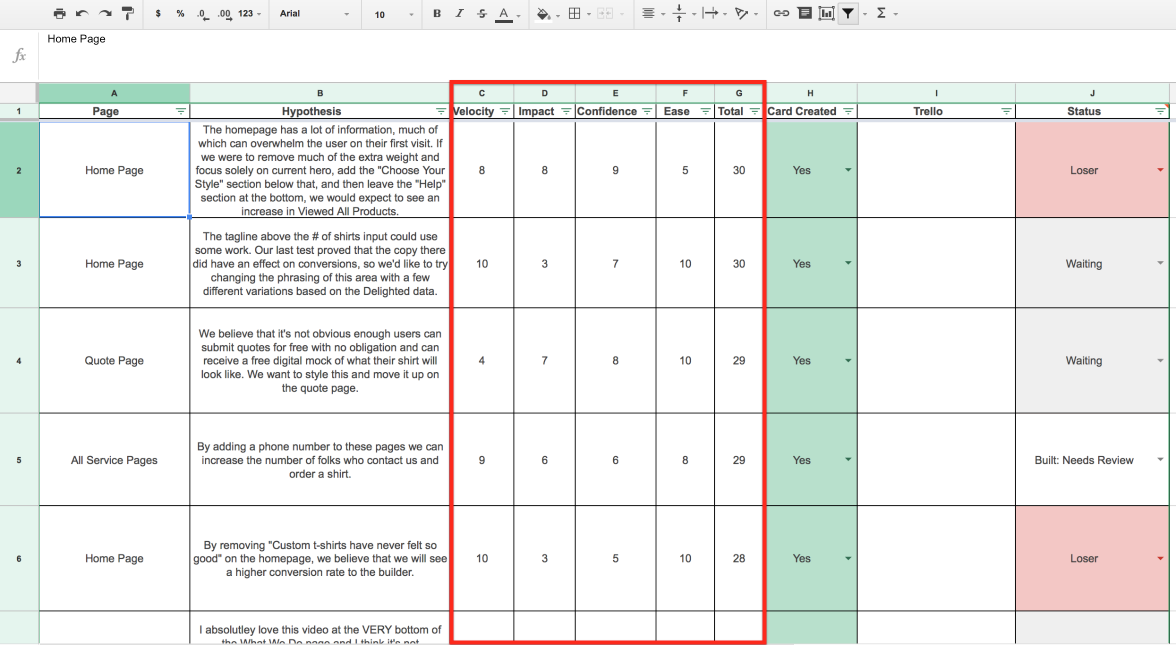

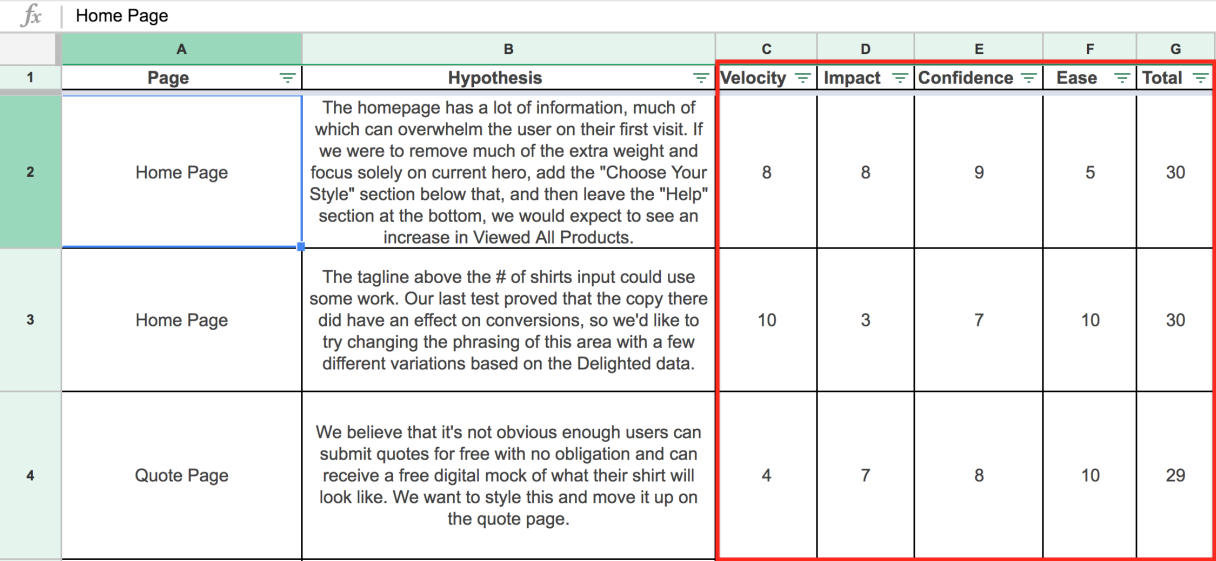

Here’s an overview of what our framework looks like in action, with the VICE section highlighted:

If we zoom in and take a closer look, you can see how we form hypotheses, score each category, and tally up the totals.

Each of these categories represents a different factor that you need to account for when running an A/B test. Let’s take a closer look at each one so you can score it as accurately as possible.

Velocity

Velocity is the speed at which a test can be run.

Your velocity score should be based on two main factors: (1) how much traffic a page is getting and (2) how noticeable a change will be.

How Much Traffic

In general, the more traffic a website is bringing in, the faster a test can be run. More traffic means more data, and more data means it’ll take less time to get a testing sample that’s statistically significant (something we’ll cover later).

When we score Velocity, we integrate with Google Analytics to get the most accurate number possible (if you want us to integrate your VICE framework with Google Analytics, contact us). Velocity is set based on the amount of traffic that goes through a page. If a page is getting 50,000 visitors per week, we can hit statistical significance pretty quickly. If a page is only getting 500 hits per week, it will take longer for you to have enough data to be confident in the results.

Boldness of Change

Reaching statistical significance isn’t just about how many people come to your website. It’s also about how big or noticeable the change you are testing is. The less noticeable the change, the less likely it is to impact user behavior, so the longer it will take for your test to reach statistical significance. For example, if you update the navigation text to a bold font, that probably won’t dramatically increase the number of clicks you get, so it will take longer to reach a level where you can be sure that the change is making a difference.

If, however, you make a dramatic change, you can reach statistical significance faster. For example, more users are going to notice a brand new call-to-action button, so you should get enough data to be confident in your results in a shorter amount of time.

Think of it like this: if the test is more dramatic or polarizing to the user’s experience, then you should expect the Velocity to be higher because you’ll see a difference faster.

Other Testing Factors

It’s also important to look at traffic numbers in relation to how many options there are on a given page. The more options (like links) there are, the longer it will generally take to complete the test.

And depending on your business or your sales cycle, there are other factors you might need to take into account. For example, if the sales cycle for one of your products is six weeks long, you won’t be able to complete even a small test in a week.

Try to consider your individual business constraints when scoring Velocity.

Pro Tip: For the statisticians out there, Velocity can be considered the metric for how long it will take to collect an adequate sample, or how long your test will need to run to have sufficient statistical power.
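To see how traffic and boldness interact, here is a sketch based on the standard textbook sample-size approximation for a two-sided, two-proportion z-test. The 95% confidence and 80% power defaults are our assumptions, as is treating a "bolder" change as a larger expected relative lift; this is not necessarily how the VICE template or any particular testing tool computes Velocity.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(base_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant (two-sided two-proportion z-test)."""
    p1 = base_rate
    p2 = base_rate * (1 + relative_lift)
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def weeks_to_run(weekly_visitors, base_rate, relative_lift, variants=2):
    """Weeks needed if the page's weekly traffic is split evenly across variants."""
    n = sample_size_per_variant(base_rate, relative_lift)
    return n * variants / weekly_visitors


# A 3% baseline conversion rate, comparing a subtle (10%) and a bold (30%) change.
for weekly in (50_000, 500):
    for lift in (0.10, 0.30):
        print(f"{weekly:>6} visitors/week, {lift:.0%} lift: "
              f"{weeks_to_run(weekly, 0.03, lift):.1f} weeks")
```

Both levers show up directly: more weekly traffic divides the runtime, and a larger expected lift shrinks the required sample roughly with the square of the difference.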

Impact

Impact is how much a change is going to contribute to an improvement in conversions.

When you’re considering what Impact score to give a particular hypothesis, it’s important to first think of your goal. If your goal is to increase the use of a free trial, then any changes on the free trial page would get a higher Impact score. If you want to increase email capture to give your sales team more leads, give a higher score to the hypotheses that are likely to impact that area.

Once you have your goal in mind, there are three other factors to consider:

- The boldness of the change

- The placement of the page in the funnel

- Your analytics data

Boldness of Change

As we mentioned with Velocity above, the more noticeable a change is to your users, the more likely it is to impact their behavior. Dramatic changes usually have a higher impact score because users are more likely to notice them, and thus they are more likely to affect your conversion rate.

Placement in the Funnel

It’s also important to consider where the page is in the funnel. In general, pages near the top of the funnel will often have more impact than those at the bottom—simply because more users will see them. So, for example, a change on the homepage could get a higher Impact score than a services page.

The other types of pages that typically get a high Impact score are checkout and pricing pages. Impact score should be higher for any place where users are trying to give you money. You want to make that process as easy as possible.

Analytics Data

Finally, you’ll want to look at your analytics data to help you score Impact (if you need help reviewing your analytics data, contact us). If you’re using Google Analytics (and you should be) with Ecommerce tracking set up, you can see each page’s value in your funnel. The higher a page’s value in Google Analytics, the higher its Impact score should be.

If you want to get really cool, we have a secret VICE framework that we can integrate directly into your Google Analytics account to pull in Velocity and Impact based on the numbers you have in Google Analytics. Contact us if you want to be amazing.

Confidence

Confidence is how you feel about the chances of a given hypothesis producing favorable results.

The more you know about this type of testing, the more accurate you’ll be at assigning confidence ratings ahead of time. That’s because even though A/B testing tends to work on a case-by-case basis, you’ll find that common problems can often be solved with common solutions. One example is adding a testimonial to a landing page.

If you’re brand new to testing, don’t overthink it and give it your best guess. Through the process of creating hypotheses and writing down your confidence, you’ll quickly gain a better grasp for which tests are likely to have success.

As you start making predictions, it can be helpful to keep track of how you and your team are doing when it comes to predicting—not as some kind of punishment (A/B testing can be finicky even for an expert), but as a teaching opportunity. Often, you can see patterns in your confidence scores. Maybe someone on your team always overestimates the impact of color, or someone else underestimates the importance of the homepage.

If someone is consistently getting more correct guesses than the rest of the team, it might even be a good idea to give a slightly higher number to the tests they have high confidence on.

Since we’re a company driven by data, we’re building a model that takes past performance into account and automatically reduces or bumps our team’s scores as necessary. That might be a little overboard for most companies, but you can apply the general principle without having to quantify it in a formula. Let us know if you would want to be a beta tester of this new tool. :)
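If you do want to put a number on the general principle, here is a deliberately simple sketch. It is not the model we’re building; it is a hypothetical illustration. It tracks each person’s hit rate on tests they rated 7 or higher, then nudges their future raw scores slightly up or down relative to the team’s hit rate. The threshold of 7, the 0.25 weight, and the prediction log are all assumptions.

```python
from collections import defaultdict


def hit_rates(predictions, threshold=7):
    """Share of each person's high-confidence calls (score >= threshold) that won."""
    calls, wins = defaultdict(int), defaultdict(int)
    for person, score, won in predictions:
        if score >= threshold:
            calls[person] += 1
            wins[person] += int(won)
    return {person: wins[person] / calls[person] for person in calls}


def adjusted_confidence(score, person_rate, team_rate, weight=0.25):
    """Nudge a raw 0-10 score toward a person's track record.

    A small weight keeps the adjustment slight, and the result is clamped
    back into the 0-10 range. With no team history, the score is unchanged.
    """
    if team_rate == 0:
        return float(score)
    factor = 1 + weight * (person_rate / team_rate - 1)
    return max(0.0, min(10.0, score * factor))


# Hypothetical prediction log: (who scored it, confidence 0-10, did the test win?)
log = [
    ("ana", 8, True), ("ana", 9, True), ("ana", 7, False), ("ana", 8, True),
    ("ben", 9, False), ("ben", 8, True), ("ben", 7, False), ("ben", 9, False),
]
rates = hit_rates(log)
high_confidence = [won for _, score, won in log if score >= 7]
team_rate = sum(high_confidence) / len(high_confidence)

for person, rate in rates.items():
    print(person, f"hit rate {rate:.0%},",
          "a raw 8 becomes", adjusted_confidence(8, rate, team_rate))
```

Keep in mind that a handful of tests per person is a small sample, so a record like this is a prompt for discussion more than a verdict.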

Ease

Ease is the amount of time and the level of technical skill that will be required to make a change.

Whew. We’re almost done. Luckily, Ease is usually the easiest category to score. You just need to consider who you need to make the change and how much time it will take them. Ask yourself if any of the adjustments related to a specific experiment can be made by someone on your team or if you’ll need to bring a developer or designer on board.

A/B testing tools like Optimizely or Visual Website Optimizer make it easy for marketers to make simple changes like removing a button or changing a headline. However, modifying the entire style of a page or even adding new pages to a website can be much more complicated.

Bigger changes like this will probably require the help of a developer and maybe even a copywriter to get the job done properly. Don’t be afraid to loop them in and get a more accurate score here.

Once you’ve determined who you need to execute a given test, consider how long it will take them. If you have to pay a developer for hours and hours of work, or hire an outside contractor, or lose a member of your marketing team for a week, give the test a lower Ease score. If it won’t take much time and you just need one member of your team, give it a higher score.

Validating Success

The VICE framework makes it a lot easier to go through the process of properly A/B testing the various elements of a website without getting overwhelmed. However, the framework can’t protect you from the errors in judgment you might make when trying to determine if an experiment was successful or not.

In some cases, it can look as though you have improved conversions, but over time, the changes may fail to produce a consistent increase in conversions. To protect yourself from such a situation, you need to ensure that your results are considered statistically significant.

If they’re not statistically significant, what you think is an improvement could in fact just be a flash in the pan. Ideally, your experiments should reach statistical significance of at least 95% (conventionally, a p-value below 0.05). By checking the statistical significance of your results and making sure they clear that 95% bar, you can determine with reasonable confidence whether you have a winning variation on your hands and whether the changes are worth keeping.
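For the curious, here is what a basic significance check looks like under the hood: a sketch of a two-sided, two-proportion z-test using only Python’s standard library. It is one common method, not necessarily the one any specific calculator uses, and the visitor and conversion counts below are made up.

```python
import math


def ab_significance(visitors_a, conversions_a, visitors_b, conversions_b):
    """Two-sided two-proportion z-test. Returns (relative lift of B over A, p-value)."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Pooled conversion rate under the null hypothesis that A and B convert equally.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)), written with the complementary error function.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return (rate_b - rate_a) / rate_a, p_value


# Hypothetical results: 10,000 visitors per variant.
for conv_b in (360, 309):
    lift, p = ab_significance(10_000, 300, 10_000, conv_b)
    verdict = "significant at 95%" if p < 0.05 else "not significant"
    print(f"B converts {conv_b}: lift {lift:+.1%}, p = {p:.3f} ({verdict})")
```

The second comparison is a 3% relative lift, like the one in the revenue example; at 10,000 visitors per variant it is nowhere near significant, which is one more reason traffic and Velocity matter.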

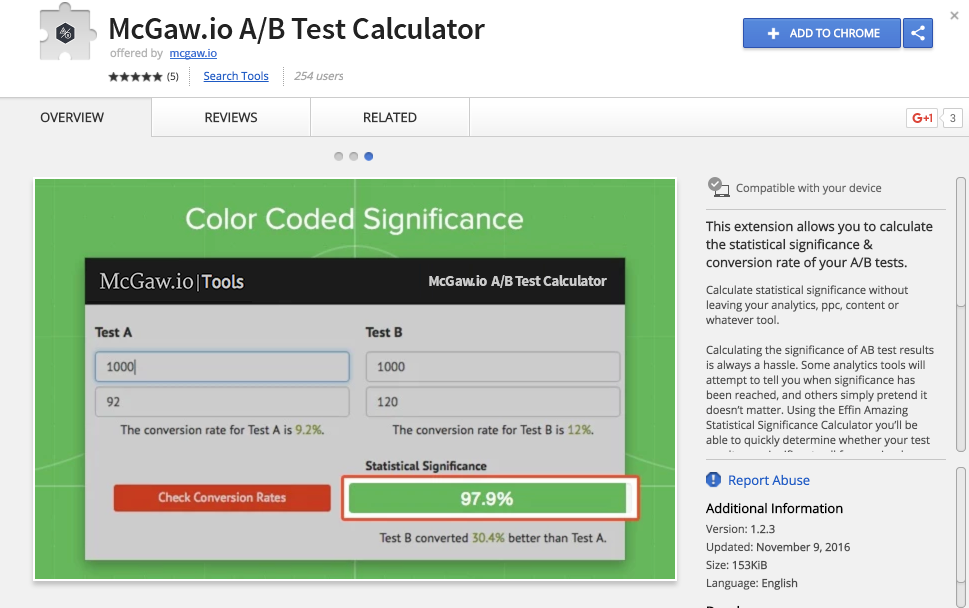

Now, if you’re not mathematically inclined or the concept of statistical significance scares you—don’t worry. We’ve created the Effin A/B Test Calculator. This simple Chrome extension can be used to determine whether or not your results are statistically significant.

It’s very easy to use. All you have to do is put in your traffic and conversion numbers and the tool will show your results. No need to mess around with any complicated formulas!

Of course, you’re free to use other tools and methods. Whatever you do, just make sure that you always check the statistical significance of your results before deciding on a winning variation.

Ensuring Long Term Success

So you picked a winning test that’s statistically significant, you’re done, right? Not quite.

Statistical significance is one test, but you also want to make sure that you’re looking at a representative sample of your data. If you have a generally low traffic site, or you’re trying to increase an uncommon event, it’s not uncommon to find out that a statistically significant result doesn’t have the same effect over the long term. The same can happen if your test runs during a particular seasonal spike in business, or if ad campaigns change mid-test and affect the sort of traffic your site acquires. (Will an e-commerce test between Thanksgiving and Christmas have the same results as if you ran the same test in July?)

To avoid this, run your tests long enough to be confident that you have an accurate sample of data. Generally speaking, even for very high-traffic sites, you’ll want at least a couple of weeks of data so that you can smooth out the effects of weekends and holidays. (This is where Velocity comes into play.)
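One simple planning rule that follows from this (our assumption, not a hard standard) is to round a test’s duration up to whole weeks, so every day of the week is represented equally, and never go below two weeks. In the sketch below, `required_visitors` is the total sample you need across all variants, for example from a sample-size calculator, and `daily_visitors` is the page’s average daily traffic.

```python
import math


def planned_test_days(required_visitors, daily_visitors, min_weeks=2):
    """Days to run a test: enough for the sample, rounded up to whole weeks."""
    days_for_sample = math.ceil(required_visitors / daily_visitors)
    weeks = max(min_weeks, math.ceil(days_for_sample / 7))
    return weeks * 7


# A high-traffic page that collects its sample in two days still runs two full weeks.
print(planned_test_days(10_000, 5_000))
```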

Even after you’ve picked a winner from your test and decided to roll it out to everybody, keep an eye on your conversion rates. There are many reasons why a variation may pass a significance test but not deliver the same results long-term. Setting your price at $9.99 worked yesterday, but you may need to set it at $9.97 tomorrow.

Your Turn

Conducting A/B experiments can become very overwhelming, very quickly. Using our VICE approach, however, you can prevent that. Provided you spend the time needed to come up with hypotheses, all you need to do is use the VICE approach to figure out where you should focus.

Once you’ve generated some data related to the test, check its statistical significance. Depending on the result returned, you’ll know if the changes are worth keeping. A/B testing is something that you’ll want to do as soon as possible, so try and take action on what you’ve learned quickly.

Sure, you might not get things right the first time around—but the VICE approach will definitely cut down the odds of failure and wasted effort.

What’s your go-to A/B test? Let us know in the comments below.
